Images from the lamp exchange/soup conversation at Nostic Palace in Prague.

Speaking at Prague College and Academy of Fine Arts in Prague this week

Joelle and I are speaking at Prague College and the Academy of Fine Arts in Prague (Akademie výtvarných umění v Praze) this week about our individual work and the project we are doing at the Nostic Palace. Many thanks to artists Molly Radecki and Natalia Vasquez for assisting us with The Difference Between Now and Then.

Rozdíl mezi teď a potom

Scrapy in process

How to install Scrapy with MacPorts (full version)


Here is a step-by-step guide explaining how I got Scrapy running on my MacBook Pro (Mac OS X 10.5) using MacPorts to install Python and all required libraries (libxml2, libxslt, etc.). The following has been tested on two separate machines with Scrapy 0.10.

Many thanks to users here who shared some helpful amendments to the default installation guide. My original intention was to post this at Stack Overflow, but their instructions discourage posting issues that have already been answered, so here it is…

1. Install Xcode with options for command line development (a.k.a. “Unix Development”). This requires a free registration.

2. Install MacPorts

3. Confirm and update MacPorts

$ sudo port -v selfupdate

4. “Add the following to /opt/local/etc/macports/variants.conf to prevent downloading the entire unix library with the next commands”

+bash_completion +quartz +ssl +no_x11 +no_neon +no_tkinter +universal +libyaml -scientific

5. Install Python

$ sudo port install python26

If for any reason you forgot to add the above variants, cancel the install and do a “clean” to delete all the intermediary files MacPorts created. Then edit the variants.conf file (above) and install Python again.

$ sudo port clean python26

6. Change the reference to the new Python installation

If you type the following you will see a reference to the default installation of Python on Mac OS X 10.5 (Python 2.5).

$ which python

You should see this

/usr/bin/python

To change this reference to the MacPorts installation, first install python_select

$ sudo port install python_select

Then use python_select to change the $ python reference to the Python version installed above.

$ sudo python_select python26

UPDATE 2011-12-07: python_select has been replaced by port select so…

To see the possible pythons run

port select --list python

From that list choose the one you want and change to it e.g.

sudo port select --set python python26

Now if you type

$ which python

You should see

/opt/local/bin/python

which is a symlink to

/opt/local/bin/python2.6

Typing the below will now launch the Python 2.6 interactive shell (Ctrl+D to exit)

$ python
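To double-check from inside the interpreter that the MacPorts build is the one actually running, a minimal sketch like the following may help. The helper name `describe_interpreter` is made up for illustration, and the `/opt/local` prefix test is an assumption based on the default MacPorts location used in the steps above.

```python
import sys


def describe_interpreter():
    """Return the path and version of the Python that is running this code."""
    return {
        "executable": sys.executable,
        "version": "%d.%d.%d" % tuple(sys.version_info[:3]),
        # Assumption: MacPorts installs under /opt/local, as in the steps above.
        "looks_like_macports": sys.prefix.startswith("/opt/local"),
    }


if __name__ == "__main__":
    info = describe_interpreter()
    for key in sorted(info):
        print("%s: %s" % (key, info[key]))
```

If `looks_like_macports` comes back False, the shell is probably still picking up a different Python than the one selected above.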

7. Install required libraries for Scrapy

$ sudo port install py26-libxml2 py26-twisted py26-openssl py26-simplejson

Other posts recommended installing py26-setuptools, but it kept returning errors, so I skipped it.

8. “Test that the correct architectures are present:

$ file `which python`

The single quotes should be backticks, which should spit out (for intel macs running 10.5):”

/opt/local/bin/python: Mach-O universal binary with 2 architectures

/opt/local/bin/python (for architecture i386): Mach-O executable i386

/opt/local/bin/python (for architecture ppc7400): Mach-O executable ppc

9. Confirm libxml2 library is installed (those really are single quotes). If there are no errors it imported successfully.

$ python -c 'import libxml2'
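The same import check can be extended to the other libraries from step 7. This is only a sketch: `check_imports` is a hypothetical helper, and the module names in the list are my assumption about what each port provides (for example, py26-openssl is imported as `OpenSSL`).

```python
def check_imports(module_names):
    """Try to import each module; map its name to True (ok) or False (missing)."""
    results = {}
    for name in module_names:
        try:
            __import__(name)
            results[name] = True
        except ImportError:
            results[name] = False
    return results


if __name__ == "__main__":
    # Assumption: these are the import names for the ports installed in step 7.
    required = ["libxml2", "twisted", "OpenSSL", "simplejson"]
    for name, ok in sorted(check_imports(required).items()):
        print("%-12s %s" % (name, "ok" if ok else "MISSING"))
```

Run it with the MacPorts interpreter; anything reported as MISSING points to a port that did not install into that Python.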

10. Install Scrapy

$ sudo /opt/local/bin/easy_install-2.6 scrapy

11. Make the scrapy command available in the shell

$ sudo ln -s /opt/local/Library/Frameworks/Python.framework/Versions/2.6/bin/scrapy /usr/local/bin/scrapy

One caveat for the above: on a fresh computer you might not have a /usr/local/bin directory, so you will need to create it before you can create the symlink.

$ sudo mkdir -p /usr/local/bin
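For anyone who prefers to script this step, here is a rough Python equivalent of "create the directory if needed, then link". `ensure_symlink` is a hypothetical helper, not part of Scrapy or MacPorts, and unlike plain `ln -s` it replaces an existing link of the same name. The demo runs in a temporary directory; pointing it at /usr/local/bin would need root privileges.

```python
import os
import tempfile


def ensure_symlink(target, link_path):
    """Create link_path pointing at target, creating the parent directory if needed."""
    parent = os.path.dirname(link_path)
    if parent and not os.path.isdir(parent):
        os.makedirs(parent)
    if os.path.islink(link_path):
        # Replace a stale link rather than failing (differs from plain `ln -s`).
        os.remove(link_path)
    os.symlink(target, link_path)
    return os.readlink(link_path)


if __name__ == "__main__":
    # Demo in a throwaway directory so nothing under /usr/local is touched.
    scratch = tempfile.mkdtemp()
    fake_target = os.path.join(scratch, "scrapy-real")
    open(fake_target, "w").close()
    link = os.path.join(scratch, "local", "bin", "scrapy")
    print("%s -> %s" % (link, ensure_symlink(fake_target, link)))
```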

12. Finally, type either of the following to confirm that Scrapy is indeed running on your system.

$ python scrapy

$ scrapy

A final final bit… I also installed ipython from MacPorts for use with Scrapy:

sudo port install py26-ipython

Make a symbolic link

sudo ln -s /opt/local/bin/ipython-2.6 /usr/local/bin/ipython

An article on ipython

http://onlamp.com/pub/a/python/2005/01/27/ipython.html

ipython tutorial

http://ipython.scipy.org/doc/manual/html/interactive/tutorial.html

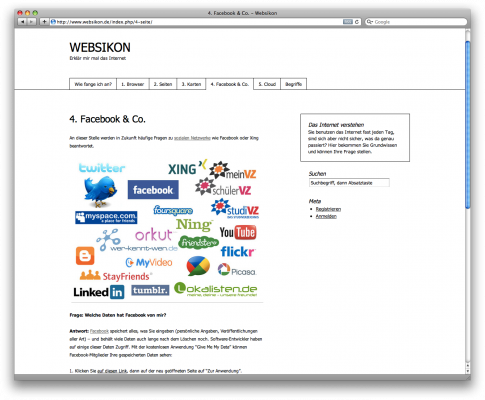

Give Me My Data Anleitung in Deutsch…

This lovely website has posted instructions in German for using Give Me My Data. Vielen Dank! English version

Was weiß Facebook über mich?, in Bild

The German newspaper Bild published another article mentioning Give Me My Data today.

Was weiß Facebook über mich? or, in English, What does Facebook know about me?

“About 500 million people worldwide use the social network Facebook to stay in touch with friends. In Germany, almost 9.8 million people are registered with Facebook. Many users are worried about their privacy. BILD.de answered the important questions…”

The Difference Between Then and Now

TINA-B Festival

Nostic Palace, Czech Ministry of Culture, Prague

October 7-24th, 2010

In the October 2010 TINA-B Contemporary Art Festival in Prague, Owen Mundy and Joelle Dietrick will re-stage their 2006 project The Darkest Hour is Just Before Dawn. For the original project, developed in York, Alabama, USA, Mundy and Dietrick borrowed lamps from the residents and installed them in an abandoned grocery store. Each lamp was set to turn on every night and, because of the inexactitude of the timers chosen, did so in an organic fashion, one by one, reflecting not only the participants in the community, but also the history of Alabama’s social movements. In an area where a nearby hazardous waste landfill made the water undrinkable, the artists and the community collectively revived the vacant commercial space, removing roomfuls of damaged post-Katrina FEMA water boxes and transforming the downtown with the lamps, pulsing at their own pace, human in their imperfections and variety, and more powerful as a collection.

Like a scientific study with controls, the re-staging of the project in Prague and Venice studies the nature of site-specific and community-based art. Both cities provide unusual cross-cultural comparisons about domestic settings and the cultural, geographical and political structures that affect private space. The 2006 installation was developed before the U.S. housing crisis, and these 2010 installations will develop while the global economy is still recovering from the current economic downturn. In this context, the simple gesture of gathering everyday objects and spaces can yield unusual insights into common assumptions about micro-macro shifts: the individual and the state, private spaces and public concerns, local and global.

Set up MacPorts Python and Scrapy successfully

“Scrapy is a fast high-level screen scraping and web crawling framework, used to crawl websites and extract structured data from their pages. It can be used for a wide range of purposes, from data mining to monitoring and automated testing.”

But, it can be a little tricky to get running…

While attempting to install Scrapy on my MBP with the help of this post and the Scrapy documentation, I kept running into errors with the libxml and libxslt libraries.

I wanted to let MacPorts manage all the libraries, but I had trouble with it referencing the wrong installation of Python. I began with three installs:

- The default Apple Python 2.5.1 located at: /usr/bin/python

- A previous version I had installed located at: /Library/Frameworks/Python.framework/Versions/2.7

- And a MacPorts version located at: /opt/local/bin/python2.6

My trouble was that:

$ python

would always default to 2.7 when I needed it to use the MacPorts version. The following did not help:

$ sudo python_select python26

I even removed the 2.7 version, which only caused an error.

I figured out I needed to put the MacPorts directory at the front of the default path using the following:

$ PATH=/opt/local/bin:$PATH ; export PATH

And then reinitiate the ports, etc.
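Which python the shell ends up running depends on the order of the directories in PATH: the first directory that contains an executable with that name wins. The sketch below imitates that lookup so you can see what a given PATH would resolve to. `find_on_path` is a hypothetical helper written for this post, and it simplifies the real shell behavior (for instance, it skips empty PATH entries).

```python
import os


def find_on_path(command, path_value):
    """Return the first executable named `command` found along path_value, or None."""
    for directory in path_value.split(os.pathsep):
        if not directory:
            # Simplification: ignore empty entries instead of treating them as ".".
            continue
        candidate = os.path.join(directory, command)
        if os.path.isfile(candidate) and os.access(candidate, os.X_OK):
            return candidate
    return None


if __name__ == "__main__":
    current = os.environ.get("PATH", "")
    print("python resolves to: %s" % find_on_path("python", current))
    # Putting /opt/local/bin first makes the MacPorts binaries win the lookup.
    preferred = os.pathsep.join(["/opt/local/bin", current])
    print("with /opt/local/bin first: %s" % find_on_path("python", preferred))
```

Comparing the two printed lines shows whether another Python install is shadowing the MacPorts one.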

Finally, I was not able to run scrapy-ctl.py by default through these instructions, so I had to reference the file directly:

/opt/local/Library/Frameworks/Python.framework/Versions/2.6/bin/scrapy-ctl.py

UPDATE

A quick addendum to this post with instructions to create the link, found on the Scrapy site (#2 and #3).

Starting with #2, “Add Scrapy to your Python Path”

sudo ln -s /opt/local/Library/Frameworks/Python.framework/Versions/2.6/bin/scrapy-ctl.py /opt/local/Library/Frameworks/Python.framework/Versions/2.6/lib/python2.6/site-packages/scrapy

And #3, “Make the scrapy command available”

sudo ln -s /opt/local/Library/Frameworks/Python.framework/Versions/2.6/bin/scrapy-ctl.py /usr/local/bin/scrapy

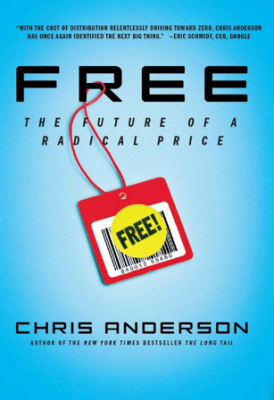

Reading list for August 2010

About to embark on some new projects here in Berlin. Here’s my reading list at the moment…

Free: The Future of a Radical Price

by Chris Anderson

July 7th 2009 by Hyperion

Traditional economics operates under fundamental assumptions of scarcity–there’s only so much oil, iron, and gold in the world. But the online economy is built upon three cornerstones: processing power, hard drive storage, and bandwidth–and the costs of all these elements are trending toward zero at an incredible rate.

The Exploit: A Theory of Networks

by Alexander R. Galloway, Eugene Thacker

October 1st 2007 by University of Minnesota Press

“The Exploit is that rare thing: a book with a clear grasp of how networks operate that also understands the political implications of this emerging form of power. It cuts through the nonsense about how ‘free’ and ‘democratic’ networks supposedly are, and it offers a rich analysis of how network protocols create a new kind of control. Essential reading for all theorists, artists, activists, techheads, and hackers of the Net.” —McKenzie Wark, author of A Hacker Manifesto

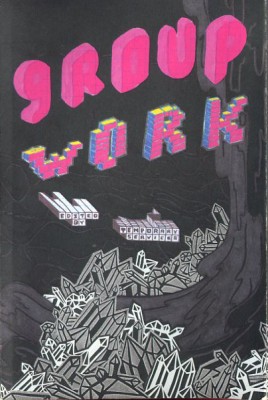

Group Work

by Temporary Services

New York, NY: Printed Matter. 2007

Based on a pamphlet published by Temporary Services in 2002 titled Group Work: A Compilation of Quotes About Collaboration from a Variety of Sources and Practices, this publication provides a multitude of perspectives on the theme of Group Work by practitioners of artistic group practice from the 1960s to the present.