I’m doing a workshop / lecture as part of the ongoing Digital Scholars digital humanities discussion group here at Florida State University. The workshop is free and open to the public.

Wednesday, March 25, 2:00-3:30 pm

Fine Arts Building (FAB) 320A [530 W. Call St. map]

“I Know Where Your Cat Lives”: The Process of Mapping Big Data for Inconspicuous Trends

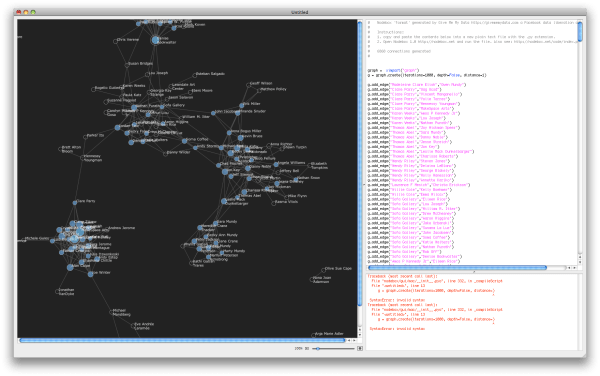

Big Data culture has its supporters and its skeptics, but it can have critical or aesthetic value even for those who are ambivalent. How is it possible, for example, to consider data as more than information: as the performance of particular behaviors, the practice of communal ideals, and the ethic motivating new media displays? Professor Owen Mundy from FSU’s College of Fine Arts invites us to take up these questions in a guided exploration of works of art that will highlight what he calls “inconspicuous trends.” Using the “I Know Where Your Cat Lives” project as a starting point, Professor Mundy will introduce us to the technical and design process for mapping big data in projects such as this one, showing us the various APIs (Application Programming Interfaces) that are constructed to support them and considering the various ways we might want to visualize their results.

This session offers a hands-on demonstration and is designed with a low barrier to entry in mind. For those completely unfamiliar with APIs, this session will serve as a useful introduction, as Professor Mundy will walk us through the process of connecting to and retrieving live social media data from the Instagram API and rendering it using the Google Maps API. Participants should not worry if they do not have expertise in big data projects or are still learning the associated vocabulary. We come together to learn together, and all levels of skill will be accommodated, as will all attitudes and leanings. Desktop computers are installed in FAB 320A, but participants are welcome to bring their own laptops and wireless devices.

Participants are encouraged to read the following in advance of the meeting:

- Sepandar D. Kamvar and Jonathan Harris. “We Feel Fine and Searching the Emotional Web.” WSDM’11 (Web Search and Data Mining 2011) Feb. 9-12, 2011, Hong Kong, China. [http://wefeelfine.org/wefeelfine.pdf]

- Mitchell Whitelaw. “Art Against Information: Case Studies in Data Practice.” The Fibreculture Journal 11 (2008). [http://eleven.fibreculturejournal.org/fcj-067-art-against-information-case-studies-in-data-practice/]

- Owen Mundy. “The Unconscious Performance of Identity: A Review of Johannes P. Osterhoff’s ‘Google’.” Rhizome.org (Aug. 2012). [http://rhizome.org/editorial/2012/aug/22/unconscious-performance-identity-review-johannes-p/]

and to browse the following resources for press on Mundy’s project:

- “The Navy’s Acoustic Warfare, Anti-Surveillance Camouflage, And Erasing Your Metadata.” NHPR.org (Aug. 2014). Interview as part of New Hampshire Public Radio’s Word of Mouth (starts at 6:35) [http://nhpr.org/post/80514-navys-acoustic-warfare-anti-surveillance-camouflage-and-erasing-your-metadata]

- “This Guy Is Cyberstalking the World’s Cats in the Name of Privacy.” Motherboard/Vice Magazine (Jul. 2014). [http://motherboard.vice.com/read/this-guy-is-cyberstalking-the-worlds-cats-in-the-name-of-privacy]

- “What the Internet Can See From Your Cat Pictures.” The New York Times (22 Jul. 2014). [http://www.nytimes.com/2014/07/23/upshot/what-the-internet-can-see-from-your-cat-pictures.html?_r=0&abt=0002&abg=0]

For further (future) reading:

- Mark Tribe and Reena Jana. “Introduction” to wikibook New Media Art (Taschen, 2006-2012). [https://wiki.brown.edu/confluence/display/MarkTribe/New+Media+Art#NewMediaArt-Introduction]
