When it comes to personal data, everyone’s first concern is usually privacy. But a lot of us want to share our data too, with friends, colleagues, and even complete strangers. While numbers have been used for centuries to improve the way we manufacture and do business, using them to quantify our personal lives is a recent phenomenon.

I’ve been thinking about this because one of my goals in creating Give Me My Data was to inspire others to reuse their data and respond with images and objects they created. But I’m learning that if you don’t know a programming language, your choices are somewhat scattered and intimidating.

In a recent email exchange with Nicholas Felton, creator of daytum.com and other quality data products, I asked him what other web applications for sharing and/or visualizing user data he had encountered while working on Daytum.

This article covers the three apps he mentioned, along with my own research on them, plus nine additions of my own. All of the apps I mention help users access their own data to track, share, and/or visualize it, either by recording it themselves or by exporting it from other software. There’s a table at the end of the article that summarizes and compares them.

Give Me My Data givememydata.com free

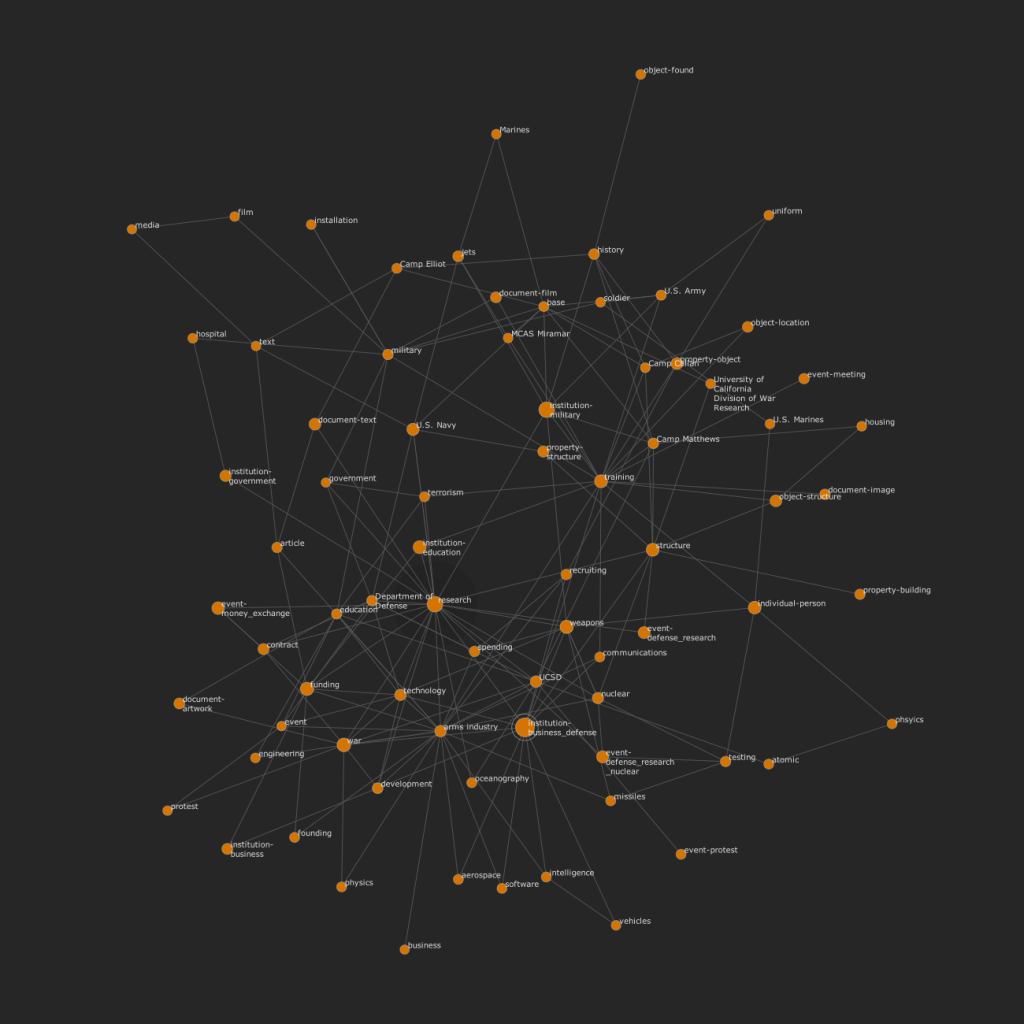

First, to give some context, Give Me My Data is a Facebook application that helps users export their data out of Facebook for reuse in visualizations, archives, or any possible method of digital storytelling. Data can be exported in common formats like CSV, XML, and JSON as well as customized network graph formats.

Status: operational, in-development
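
For readers who do know a little code, here is a minimal sketch of what reusing an exported file might look like, using only Python’s standard library. The sample records and the field names (`name`, `hometown`) are hypothetical stand-ins, not the actual schema Give Me My Data produces; a real export would be read from a file instead of the inline string.

```python
import csv
import io
import json
from collections import Counter

# Hypothetical sample standing in for an exported JSON file; the field
# names ("name", "hometown") are assumptions, not the app's real schema.
SAMPLE_EXPORT = """
[
  {"name": "Ana", "hometown": "Chicago"},
  {"name": "Ben", "hometown": "Berlin"},
  {"name": "Cy", "hometown": "Chicago"}
]
"""


def count_by_field(records, field):
    """Count how many records share each value of the given field."""
    return Counter(r.get(field, "unknown") for r in records)


def to_csv(records, fields):
    """Serialize the records as CSV text with a header row."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=fields, extrasaction="ignore")
    writer.writeheader()
    writer.writerows(records)
    return buffer.getvalue()


records = json.loads(SAMPLE_EXPORT)
hometown_counts = count_by_field(records, "hometown")
csv_text = to_csv(records, ["name", "hometown"])

print(hometown_counts.most_common())
print(csv_text)
```

The counts could feed a simple bar chart, and the CSV text could be pasted into a spreadsheet or one of the graphing apps below.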

Daytum daytum.com free/$$

To add further context, I’ll also address Daytum, an online app that allows users to collect, categorize, and share personal or other data. You can add any data that can be quantified or written down, then organize and display it in many forms, including bar and pie charts, plain text, and lists. There’s also a mobile site for quick submissions from your device, or you can use their iPhone app.

Status: operational, but not currently being developed

Geckoboard geckoboard.com $$

Geckoboard is a hosted real-time status board for all sorts of business (or personal) data. You can view web analytics, CRM, support, infrastructure, project management, etc., in one interface, on your computer or smartphone. To see data from other web services in your “dashboard” you add “widgets”: choose from a large list of APIs, give permissions, configure a variety of options, and see your data in a customized graph. Note, though, that this service only presents data that is hosted elsewhere, and only in this interface. If you like looking at numbers all day, this is for you.

Status: operational

Track-n-Graph trackngraph.com free/$$

Track, graph, and share any information you can think of: your weight, gas mileage, coffee consumption, anything. The design is a little awkward, the graphs don’t display in Chrome or Safari (Mac), and as far as I can tell there’s no API, but the site seems very useful for storing and making simple graphs of your personal data. There are also various “templates” you can reuse to keep track of data, like the Workout Tracker, which has fields for gender and age in addition to the minutes you worked out, all of which are important in calculating other data (e.g. calories).

Status: operational

your.flowingdata.com your.flowingdata.com/ free

your.flowingdata lets you record your personal data with Twitter. With it you can collect data, interact with it, customize views, and determine privacy by sending private tweets to your account. This project was created by Nathan Yau, who writes FlowingData and studies statistics at UCLA.

Status: operational, in-development

mycrocosm mycro.media.mit.edu free

Mycrocosm is a web service that allows you to track and share data and statistical graphs from the minutiae of daily life. Mycrocosm was developed by Yannick Assogba of the Sociable Media Group of the MIT Media Lab.

Status: operational, but not currently being developed

ManyEyes www-958.ibm.com free

ManyEyes is a project by IBM Research and the IBM Cognos software group. On ManyEyes you can upload your own data and create visualizations, and view, discuss, and rate others’ visualizations and data sets. It is a great concept, but it hasn’t evolved much since its original launch. In fact, I’m finding that the visualization technology has slowly degraded, leaving only about 20% of visualizations actually displaying (Chrome 12.0 on OS X 10.5.8, if folks are reading).

Status: operational

Fitbit fitbit.com $99.95

The Fitbit is a hardware device that tracks your motion and sleep throughout each day. This data can be uploaded and visualized on their website to reveal information about your daily activities, like calories burned, steps taken, distance traveled, and sleep quality. The Fitbit contains a 3D motion sensor like the one found in the Nintendo Wii and plugs into a base station to upload the data.

Status: operational

Personal Google Search History google.com/history free

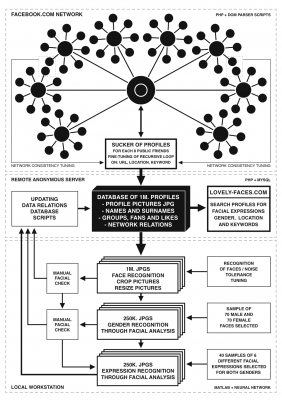

When I first saw this application on the Google site I was immediately alarmed. The amount of data they have collected is staggering; for example, “Total Google searches: 36323” (since Jan 2006). This is a fantastic window into the life of a user and what they are reading, watching, and responding to. It’s like another, admittedly less manicured, version of Facebook. Instead of creating a profile, I am being profiled.

The privacy implications are serious here, which is probably why you have to log in again to view it. It is also why a user’s search history draws the interest of interface artist Johannes P. Osterhoff, who is exploring the line between private and public data, as well as the even further-evaporated division between surveillance and social networks, in his one-year-long search-history-made-public project, simply titled Google.

But, as everyone probably already knows, these big companies are making money and providing services. Google has the resources to take your privacy seriously. Well, kind of, because not tracking people mostly doesn’t fit into their business model.

Status: operational

Google Takeout google.com/takeout free

Speaking of resources, I’m quite impressed by this project. Google Takeout is developed by an engineering team at Google called the Data Liberation Front, who take their jobs very seriously. In addition to Google Takeout, which allows you to export some of your data from Google, they have a really great website with current information about getting access to the data you store with Google.

Status: operational, in-development

gottaFeeling gottafeeling.com free/$$

gottaFeeling is an iPhone application that allows you to track and share your feelings. It’s a simple concept and, while loaded down with a lot of rhetoric, reminds me of the amazing “We Feel Fine.”

Status: in-development

BuzzData buzzdata.com unsure

Finally, there’s BuzzData, a data-publishing platform that encourages the growth of communities around data. It is not yet public, but I’ve received a private preview of what this app will do, and it looks like it will be pretty cool. Think of a mashup between GitHub and ManyEyes.

Status: still in-development, not public

So I’ll end with the table I created in my research. There are obviously many more ways to keep and manage data that I haven’t addressed here, but this is a good start. For further reading, check out the Quantified Self blog, user community, and conference created by Gary Wolf, who also wrote The Data-Driven Life, the New York Times article linked above.

| app | track/upload | custom data types | visualize | publish | privacy | export | mobile upload | API | price | limits |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Give Me My Data | yes | yes | n/a | no | yes | yes | n/a | no | free | none |
| Daytum | yes | yes | yes | yes | $$ | yes | mobile site and iPhone app | no | free / $4 per month | free account limited by amount |
| Geckoboard | no | yes | yes | no | yes | no | n/a | only for viewing | $9-$200 per month | number of users |
| Track-n-Graph | yes | yes | yes | yes | yes | no | web-based | no | free / $25 per year | free account limited by amount |
| your.flowingdata | yes | yes | yes | yes | yes | yes | via Twitter | via Twitter | free | none |
| mycrocosm | yes | yes | yes | yes | yes | no | web-based | email-based | free | none |
| ManyEyes | yes | yes | yes | yes | no | yes | no | no | free | none |
| BuzzData | yes | yes | yes | yes | yes | yes | email | ??? | ??? | ??? |
| Personal Google Search History | yes | n/a | yes | yes | yes | yes | yes | no | free | none |
| Google Takeout | yes | yes | n/a | n/a | yes | yes | n/a | no | free | none |
| Fitbit | yes | yes | yes | yes | yes | yes | yes | yes | $100 / website is free | free web account limited by amount |
| gottaFeeling | yes | no | no | yes | yes | no | iPhone | no | free | none |

| column | what it means |
| --- | --- |
| track/upload | Can you track or upload your own data? |
| custom data types | Does the app support custom data types? |
| visualize | Can you create visualizations with the app? |
| publish | Can you publish your data with the software? |
| privacy | Are there options for keeping your data private while using the app? |
| export | Can you export the data back out? |
| mobile upload | Are there options to track or upload data from a device? |
| API | Is there an Application Programming Interface that allows you to write code to manage data? |
| price | Is there a free version? |
| limits | What limits are imposed on the free version? |

Update: Check out Google Gauges and other Google Charts.